In celebration of AI Month, we are thrilled to congratulate Andrew Zhu, advised by Chris Callison-Burch, on receiving a prestigious 2024 NSF Graduate Research Fellowship (GRFP). Andrew’s dedication and innovative vision have earned him this recognition, marking a significant milestone in his academic journey.

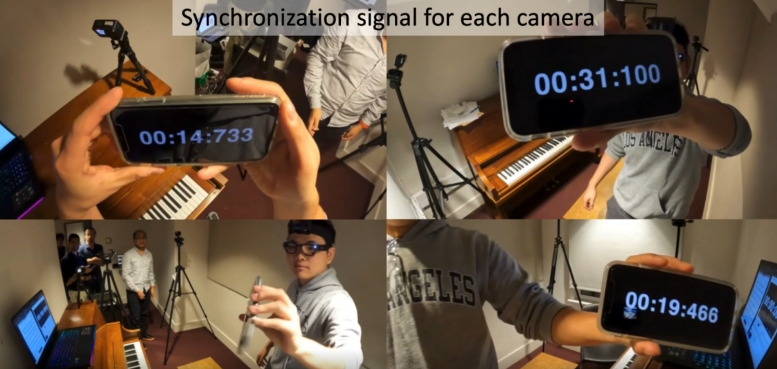

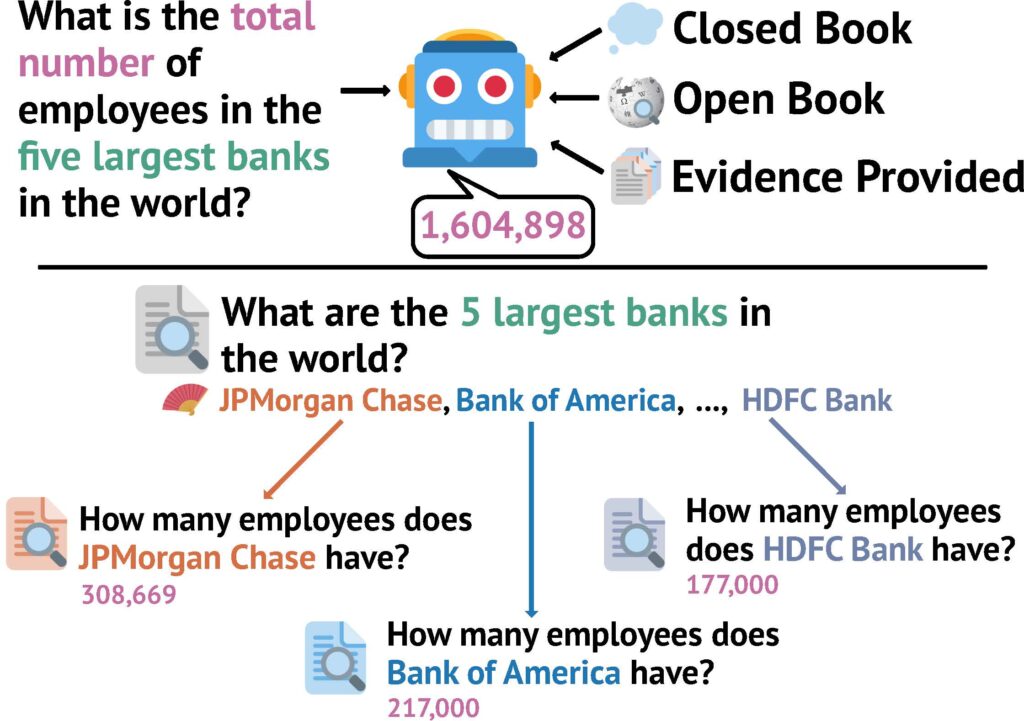

Andrew’s research addresses a critical challenge for Large Language Models (LLMs): despite their formidable capabilities, they often ‘forget’ the original context when confronted with complex inquiries or long-running tasks. For instance, while humans navigate multi-step queries with ease, LLMs struggle to retain the original context across successive stages of information retrieval. Motivated by this gap, Andrew’s previous work, FanOutQA [1], highlighted the limitations of current models, showing a wide gap between human accuracy and machine performance.

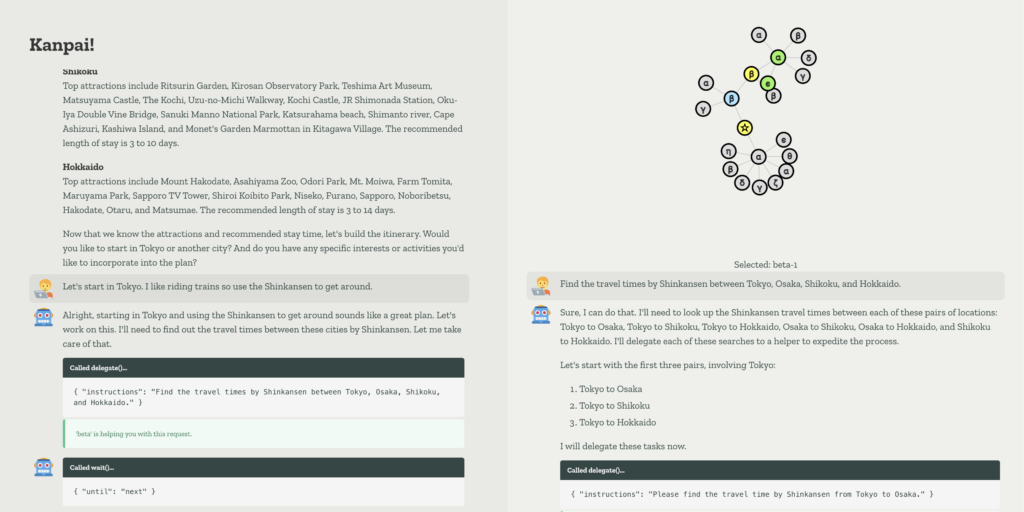

Central to Andrew’s proposed solution is the concept of recursive delegation, a groundbreaking approach aimed at endowing LLMs with the ability to allocate intricate tasks to subordinate LLMs. This hierarchical structure allows for the decomposition of complex problems into more manageable segments, with each ‘child’ LLM equipped to handle its designated subtask. Through this cascading delegation, Andrew envisions a synergistic collaboration among LLMs, comparable to a relay race where each participant contributes to achieving the final objective.
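To make the idea concrete, here is a minimal sketch of recursive delegation in Python. It is not Andrew's implementation: `split_task` and `answer_directly` are hypothetical stand-ins for LLM calls (here they are plain string operations so the example runs on its own), and the depth limit is an assumption added to guarantee termination.

```python
from typing import List


def split_task(task: str) -> List[str]:
    """Hypothetical stand-in for an LLM deciding how to decompose a task.

    Here we simply split on the word " and "; a real system would ask a model.
    Returns an empty list when the task cannot be split further.
    """
    parts = [p.strip() for p in task.split(" and ") if p.strip()]
    return parts if len(parts) > 1 else []


def answer_directly(task: str) -> str:
    """Hypothetical stand-in for an LLM answering a small, self-contained task."""
    return f"<answer to: {task}>"


def delegate(task: str, depth: int = 0, max_depth: int = 3) -> str:
    """Solve a task by recursively handing subtasks to 'child' workers.

    Each child sees only its own subtask, so the parent never has to hold
    the children's intermediate work in its own context.
    """
    # Stop decomposing once the (assumed) depth limit is reached.
    subtasks = split_task(task) if depth < max_depth else []
    if not subtasks:
        return answer_directly(task)

    # Each subtask goes to a child, which may delegate further.
    child_results = [delegate(s, depth + 1, max_depth) for s in subtasks]

    # The parent only combines the children's final results.
    return " + ".join(child_results)


if __name__ == "__main__":
    print(delegate("find the population of Paris and find the population of Rome"))
```

The design point the sketch tries to capture is that a parent only ever sees its children's final answers, which is one way the original question can stay in view while the detailed work happens elsewhere.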

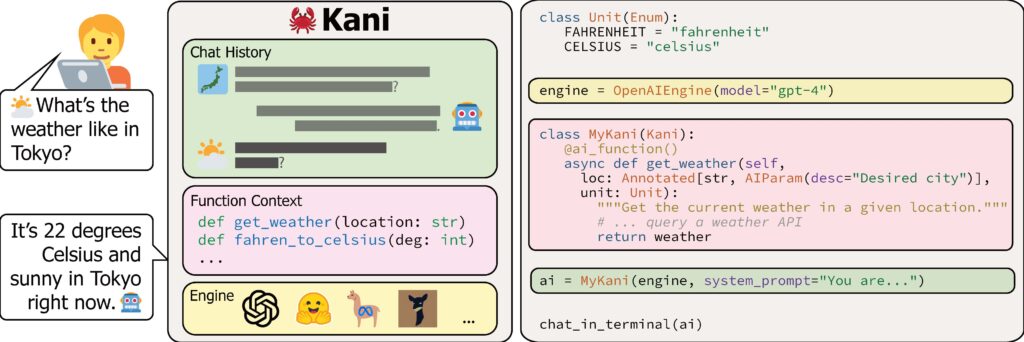

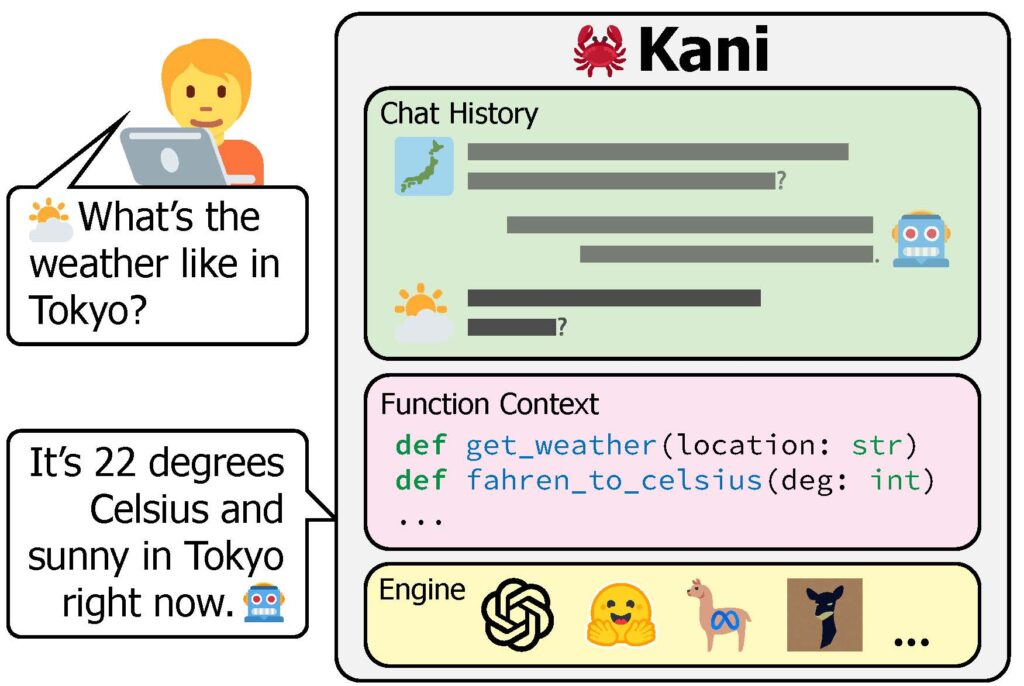

In laying the groundwork for his research, Andrew has developed Kani [2], a lightweight framework designed to let LLMs interact with human-written code. The tool allows researchers to experiment with different LLMs while exposing ordinary Python functions to them. By making such tools openly available, Andrew aims to accelerate progress in the field and foster a collaborative ecosystem of exploration and discovery.
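The general pattern of letting a language model call Python functions can be sketched as follows. This is an illustration of the idea only and does not use Kani's actual API: the `tool` decorator, the `TOOLS` registry, `fake_model`, and `run_turn` are all hypothetical names, and the "model" is a stub that always requests the same call.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping function names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a Python function so the model may request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


def fake_model(prompt: str) -> str:
    """Stub standing in for an LLM.

    A real model would read the prompt and the tool descriptions, then emit a
    structured request; this stub always asks for add(2, 3).
    """
    return json.dumps({"name": "add", "arguments": {"a": 2, "b": 3}})


def run_turn(prompt: str) -> Any:
    """Ask the model what to do, then execute the function it requested."""
    request = json.loads(fake_model(prompt))
    fn = TOOLS.get(request["name"])
    if fn is None:
        raise KeyError(f"model requested unknown function: {request['name']}")
    # Keyword arguments come straight from the model's structured request.
    return fn(**request["arguments"])


if __name__ == "__main__":
    print(run_turn("What is 2 + 3?"))
```

In a real framework the function's result would typically be passed back to the model so it can compose a natural-language reply; that step is omitted here to keep the sketch short.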

Andrew’s advisor, Chris Callison-Burch, effectively summarizes the significance of his work, stating,

“Andrew Zhu’s research is at the cutting edge of programming languages, natural language processing, and artificial intelligence. His groundbreaking open-source software package, Kani, elegantly solves a major weakness of large language models (LLMs) like ChatGPT by enabling them to write code and call functions. Kani seamlessly integrates LLMs into Python programs, allowing developers to write functions that can be called by the language model. This game-changing approach combines the strengths of AI and traditional programming, enabling LLMs to interweave natural language generation with complex computations.

Andrew’s work has the potential to revolutionize how we develop AI systems, paving the way for more powerful and versatile AI applications that go beyond ChatGPT’s current abilities of understanding and generating natural language to also include the abilities to create and manipulate code.”

Chris Callison-Burch

As Andrew embarks on this ambitious journey, his work holds the promise of transforming the landscape of artificial intelligence, ushering in a new era of collaboration and problem-solving. We eagerly anticipate the insights and advancements that will emerge from his pioneering efforts, underscoring the transformative potential of AI in shaping our collective future. Once again, congratulations to Andrew Zhu on this well-deserved recognition, and here’s to a future marked by innovation, collaboration, and boundless possibilities in the realm of AI.

References:

[1] Andrew Zhu, Alyssa Hwang, Liam Dugan, and Chris Callison-Burch. 2024. FanOutQA: Multi-Hop, Multi-Document Question Answering for Large Language Models. arXiv preprint, in submission at ACL 2024. https://arxiv.org/abs/2402.14116

[2] Andrew Zhu, Liam Dugan, Alyssa Hwang, and Chris Callison-Burch. 2023. Kani: A Lightweight and Highly Hackable Framework for Building Language Model Applications. In Proceedings of the 3rd Workshop for Natural Language Processing Open Source Software (NLP-OSS 2023), pages 65–77, Singapore. Association for Computational Linguistics. https://aclanthology.org/2023.nlposs-1.8/